Entropy is a fundamental concept in thermodynamics that measures the degree of disorder or randomness in a system. It plays a crucial role in understanding the behavior of systems, ranging from chemical reactions to the universe as a whole. While entropy might appear to be a complex and abstract concept, it holds a fascinating array of enigmatic facts that shed light on the nature of the world around us.

In this article, we will delve into 16 intriguing facts about entropy that will challenge your understanding of the concept. From its connection to the arrow of time to its role in determining the efficiency of heat engines, these facts will not only expand your knowledge of entropy but also ignite your curiosity about the hidden complexities of the universe. So, grab a cup of coffee and get ready to explore the mysterious world of entropy!

Key Takeaways:

- Entropy measures disorder and never decreases in isolated systems, impacting energy efficiency and the arrow of time. It’s crucial for understanding the universe’s fundamental principles.

- Entropy applies to physical and informational systems, influences probability, and is a subject of ongoing research across various scientific fields. It’s a captivating and complex concept with wide-ranging implications.

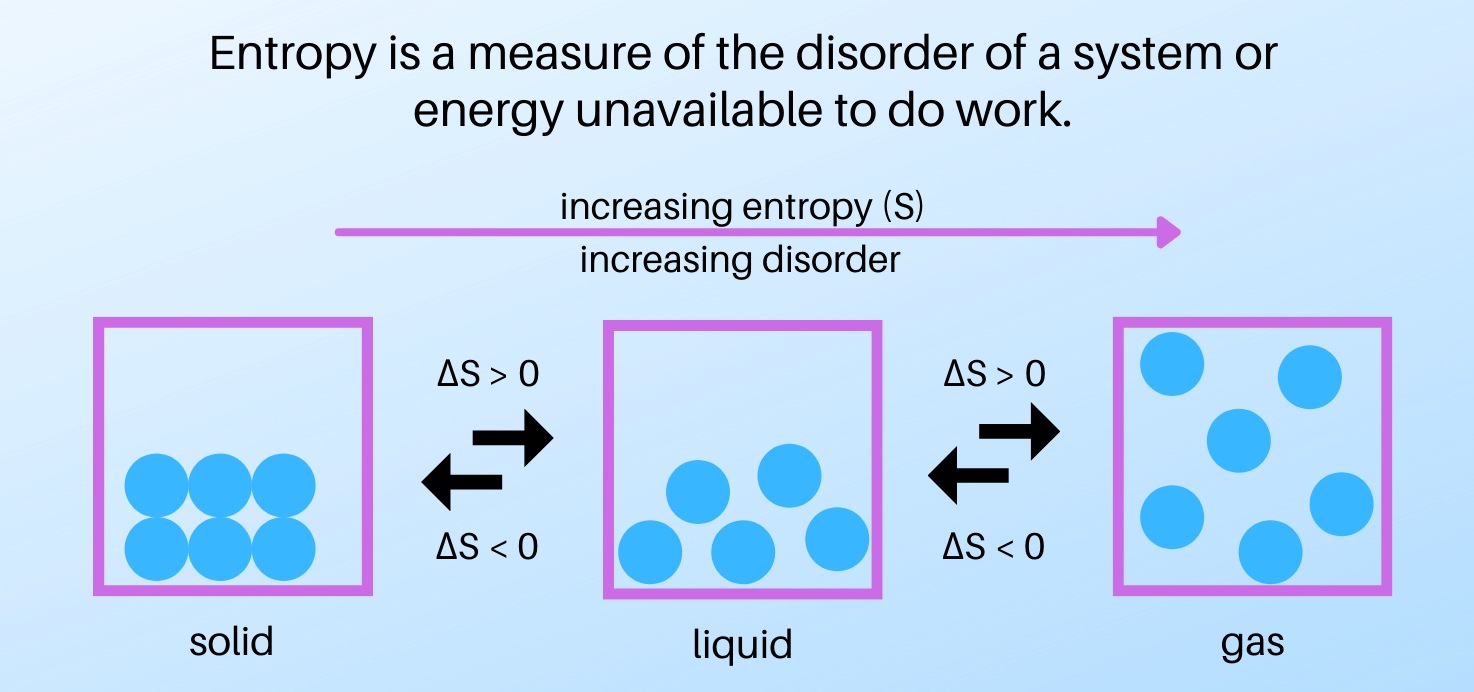

Entropy is a measure of disorder.

Entropy is a fundamental concept in thermodynamics that quantifies the amount of disorder or randomness in a system. It provides a way to understand the behavior of energy and the direction of processes.

Entropy never decreases in isolated systems.

One of the key principles of entropy is that it tends to increase over time in isolated systems, which exchange neither energy nor matter with their surroundings. This is the second law of thermodynamics: the entropy of an isolated system either remains constant, in idealized reversible processes, or increases.

Entropy can never be negative.

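The absolute entropy of a system is always zero or positive. In statistical terms, entropy is proportional to the logarithm of the number of microscopic arrangements available to the system; since there is always at least one arrangement, that logarithm is never below zero. The change in a system's entropy, however, can be negative, for example when a liquid freezes and gives up heat to its surroundings.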

Entropy affects the efficiency of energy conversions.

Real energy conversions, such as heat transfer or chemical reactions, are irreversible and generate entropy. This entropy production does not destroy energy, but it degrades part of it into a form that can no longer do useful work, which lowers the overall efficiency of the process.

Entropy explains why we cannot have 100% efficient engines.

According to the second law of thermodynamics, it is impossible to build a heat engine with 100% efficiency. To run in a cycle, an engine must reject some heat to a colder reservoir so that the total entropy of the engine and its surroundings does not decrease, and that rejected heat cannot be converted into work.
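As a rough illustration of this limit, the sketch below computes the Carnot bound, the textbook upper limit 1 - T_cold / T_hot on the efficiency of any heat engine working between two reservoirs. The function name and the example temperatures are assumptions chosen for illustration; they do not come from the article.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on heat-engine efficiency between two reservoirs.

    Temperatures are absolute (kelvin). Real engines fall below this bound.
    """
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("need t_hot_k > t_cold_k > 0")
    return 1.0 - t_cold_k / t_hot_k


# Hypothetical reservoirs at 800 K and 300 K.
print(carnot_efficiency(800.0, 300.0))  # 0.625
```

The bound reaches 100% only if the cold reservoir sits at absolute zero, which is why some heat is always rejected.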

Entropy is involved in phase transitions.

During phase transitions, such as melting or vaporization, the entropy of a substance changes. Melting and vaporization bring a significant increase in entropy because the particles take on a more disordered arrangement. The reverse transitions, freezing and condensation, lower the entropy of the substance by the same amount.
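For a transition that happens at constant temperature and pressure, the entropy change equals the enthalpy of the transition divided by the absolute temperature. The sketch below applies that relation to water, using commonly quoted textbook values for the enthalpies of fusion and vaporization; those numbers and the function name are assumptions for illustration, not figures from the article.

```python
def transition_entropy(delta_h_j_per_mol: float, temperature_k: float) -> float:
    """Molar entropy change, in J/(mol K), for a transition at fixed temperature."""
    return delta_h_j_per_mol / temperature_k


# Approximate textbook values for water at 1 atm (assumed, not from the article).
melting = transition_entropy(6010.0, 273.15)    # ice -> liquid water
boiling = transition_entropy(40650.0, 373.15)   # liquid water -> steam

print(f"melting: {melting:.1f} J/(mol K)")  # melting: 22.0 J/(mol K)
print(f"boiling: {boiling:.1f} J/(mol K)")  # boiling: 108.9 J/(mol K)
```

Vaporization gives a much larger entropy change than melting, which fits the picture of a gas being far more disordered than a liquid.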

Entropy can be calculated using the Boltzmann formula.

The entropy of a system can be calculated using the Boltzmann formula, which relates entropy to the number of possible microstates of a system. It is given by the equation S = k ln(W), where S is the entropy, k is Boltzmann’s constant, and W is the number of microstates.
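A minimal sketch of the formula in code, assuming the microstate count W is already known as an integer (in practice, counting W is the hard part). The function name is hypothetical; the value of Boltzmann's constant is the exact SI definition.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact SI value)


def boltzmann_entropy(microstates: int) -> float:
    """Return S = k ln(W) in J/K for a system with W microstates."""
    if microstates < 1:
        raise ValueError("a system has at least one microstate")
    return K_B * math.log(microstates)


print(boltzmann_entropy(1))      # 0.0, a single microstate means zero entropy
print(boltzmann_entropy(10**6))  # a tiny number of J/K, since k is so small
```

Because of the logarithm, squaring the number of microstates only doubles the entropy.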

Entropy plays a crucial role in information theory.

In information theory, entropy quantifies the uncertainty or randomness of a message source. It gives the average amount of information, usually measured in bits, needed to encode each symbol of the message.
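One common way to make this concrete is the Shannon entropy, which sums p * log2(1/p) over the symbol probabilities p. The sketch below estimates those probabilities from character frequencies in a string; that estimation step and the function name are assumptions made for illustration.

```python
import math
from collections import Counter


def shannon_entropy_bits(message: str) -> float:
    """Average information per character, in bits, from observed frequencies."""
    total = len(message)
    counts = Counter(message)
    # Each symbol contributes p * log2(1/p), where p is its relative frequency.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(shannon_entropy_bits("aaaa"))  # 0.0, fully predictable
print(shannon_entropy_bits("abab"))  # 1.0, one bit per character
print(shannon_entropy_bits("abcd"))  # 2.0, two bits per character
```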

Entropy applies to both physical and informational systems.

Entropy is a versatile concept that applies not only to physical systems but also to informational systems. It can be used to analyze data compression, encryption, and the overall complexity of information.
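The link to data compression can be seen in a small experiment: predictable, low-entropy data shrinks to almost nothing, while random, high-entropy data barely compresses at all. This sketch uses Python's standard `zlib` module as a stand-in for any general-purpose compressor; the data sizes and the fixed random seed are arbitrary choices.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the data after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))


n = 10_000
repetitive = b"a" * n                                # low entropy: one symbol repeated
rng = random.Random(0)                               # fixed seed for repeatability
noisy = bytes(rng.getrandbits(8) for _ in range(n))  # high entropy: random bytes

print("repetitive:", compressed_size(repetitive), "bytes")
print("random:    ", compressed_size(noisy), "bytes")
```

The repetitive input compresses to a small fraction of its original size, while the random input stays close to its original 10,000 bytes.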

Entropy has connections to the arrow of time.

The increase in entropy over time is often associated with the direction of the arrow of time. The second law of thermodynamics implies that systems tend to evolve towards a state of higher entropy, leading to the perception of time progressing in a specific direction.

Entropy is affected by the number of particles and their distribution.

The entropy of a system is influenced by the number of particles it contains and how these particles are distributed among different states or energy levels. More possible arrangements or configurations lead to higher entropy.
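A toy model makes the counting explicit. Assume, for illustration only, a box divided into two halves and holding N distinguishable particles. The number of arrangements with exactly n particles in the left half is the binomial coefficient "N choose n", and the logarithm of that count is the entropy in units of Boltzmann's constant.

```python
import math


def multiplicity(n_particles: int, n_left: int) -> int:
    """Number of ways to put n_left of n_particles in the left half of a box."""
    return math.comb(n_particles, n_left)


# Ten particles: compare lopsided and even distributions (W = 1, 45, 252).
for n_left in (0, 2, 5):
    w = multiplicity(10, n_left)
    print(f"{n_left} on the left: W = {w}, ln(W) = {math.log(w):.3f}")
```

The even split has by far the most arrangements, so it is the highest-entropy distribution.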

Entropy can be used to forecast the probability of events.

In statistical mechanics, entropy is related to the probability of different states occurring. By analyzing the entropy of a system, it is possible to make predictions about the likelihood of specific events or outcomes.
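To see how arrangement counts translate into probabilities, consider fair coin flips as a stand-in for a physical system, an assumption made purely for illustration. Every exact sequence of heads and tails is equally likely, so the probability of an outcome such as "between 40 and 60 heads" is the number of sequences that match it divided by the total number of sequences.

```python
import math


def prob_heads_between(n_flips: int, low: int, high: int) -> float:
    """Probability that the number of heads in n_flips fair flips is in [low, high]."""
    favorable = sum(math.comb(n_flips, k) for k in range(low, high + 1))
    return favorable / 2 ** n_flips


print(prob_heads_between(10, 5, 5))       # 0.24609375, i.e. 252 of 1024 sequences
print(prob_heads_between(100, 40, 60))    # near-even outcomes dominate
print(prob_heads_between(100, 100, 100))  # all heads: a single sequence out of 2**100
```

Outcomes with many matching arrangements, which are the high-entropy ones, are overwhelmingly more likely than ordered ones such as all heads.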

Entropy can decrease locally in systems.

Although entropy tends to increase in isolated systems, it is possible for entropy to decrease locally. However, any decrease in entropy in one part of a system is compensated by an increase at least as large elsewhere, so the total never decreases.
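Heat flowing from a hot object to a cold one is a simple case of a local decrease. The sketch below assumes both objects are large enough that their temperatures stay effectively constant, so each entropy change is just the heat transferred divided by the temperature. The function name and the numbers are illustrative.

```python
def heat_flow_entropy_changes(q_joules: float, t_hot_k: float, t_cold_k: float):
    """Entropy changes (J/K) when heat q flows from a hot to a cold reservoir."""
    ds_hot = -q_joules / t_hot_k    # the hot side loses entropy
    ds_cold = q_joules / t_cold_k   # the cold side gains more than the hot side lost
    return ds_hot, ds_cold, ds_hot + ds_cold


ds_hot, ds_cold, ds_total = heat_flow_entropy_changes(1000.0, 400.0, 300.0)
print(f"hot: {ds_hot:.3f}  cold: {ds_cold:.3f}  total: {ds_total:.3f}")
# hot: -2.500  cold: 3.333  total: 0.833
```

The hot object's entropy drops, but the total still rises because the same heat counts for more at the lower temperature.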

Entropy stays constant in certain idealized processes.

In some idealized processes, entropy does not increase. In a reversible adiabatic compression, the entropy of the system stays constant; in a reversible heat transfer, entropy moves between the system and its surroundings while their total stays constant. Such processes cannot be fully realized in practice, as they would have to proceed infinitely slowly and without friction or other dissipation.

Entropy is related to the concept of information entropy.

The concept of entropy in thermodynamics shares similarities with the concept of information entropy in computer science and communication theory. Both involve quantifying the amount of uncertainty or randomness in a system.

Entropy is a subject of ongoing research.

Entropy continues to be an active area of research in various fields, including physics, chemistry, information theory, and complexity science. Scientists are exploring its applications in different domains and expanding our understanding of this intriguing concept.

These 16 enigmatic facts about entropy provide a glimpse into the fascinating world of thermodynamics and information theory. Understanding entropy is crucial for comprehending the behavior of energy, the arrow of time, and the fundamental principles governing our universe. Whether it’s the increase in disorder, the impact on energy conversions, or its role in information theory, entropy remains a captivating and complex concept that continues to spark scientific inquiry.

Conclusion

In conclusion, entropy is a fascinating and complex concept in physics and chemistry. It plays a crucial role in understanding the behavior and transformations of matter and energy. From its connection to the randomness and disorder in a system to its relationship with energy and the laws of thermodynamics, entropy helps us unravel the mysteries of the physical world.

By exploring the enigmatic facts about entropy, we can grasp its profound implications in various fields, from physics to biology. It reminds us that even in seemingly chaotic systems, there is underlying order and predictable behavior.

As we continue to delve into the intricacies of entropy, we open doors to new discoveries and advancements in scientific knowledge. Embracing the scientific methods that guide our understanding of entropy can lead to breakthroughs that shape our world and improve our lives.

FAQs

1. What is entropy?

Entropy is a measure of the randomness or disorder in a system. In thermodynamics, it is a fundamental concept related to the flow of heat and energy.

2. How is entropy related to the laws of thermodynamics?

Entropy is closely connected to the second law of thermodynamics, which states that the entropy of an isolated system tends to increase over time.

3. Why does entropy increase in an isolated system?

The increase in entropy occurs due to the tendency of energy to disperse and the increase in the number of microstates associated with a system. As time progresses, the system becomes more spread out and disordered.

4. Can entropy be reversed?

While entropy can decrease in a localized system, the overall entropy of the universe never decreases. This one-way tendency underlies the “arrow of time” and the “irreversibility of natural processes.”

5. How is entropy related to statistical mechanics?

Statistical mechanics provides a microscopic explanation of entropy, relating it to the distribution and arrangement of particles in a system.

6. Is entropy always a disadvantage?

Entropy is not necessarily a disadvantage but rather a natural consequence of how energy and matter behave. It is often associated with the dissipation of useful energy, but it also allows for new possibilities and the spontaneity of chemical reactions.

7. Can entropy be measured?

Entropy cannot be read directly off an instrument, but it can be determined indirectly: in practice from measurements of heat flow and temperature, and in theory from the energy distribution and the number of possible states a system can occupy.

8. Does entropy have practical applications?

Entropy has numerous practical applications in various fields, including energy conversion processes, information theory, and climate science.

9. How does entropy relate to the concept of order?

Entropy is often associated with disorder, but it is more accurately related to the distribution and arrangement of particles in a system rather than the concept of orderliness.

10. Is there a connection between entropy and human systems?

While entropy finds its origins in the study of physical systems, the concept has been applied to various other disciplines, such as economics, sociology, and information theory, to understand the behavior and organization of human systems.
