What is Underfitting?

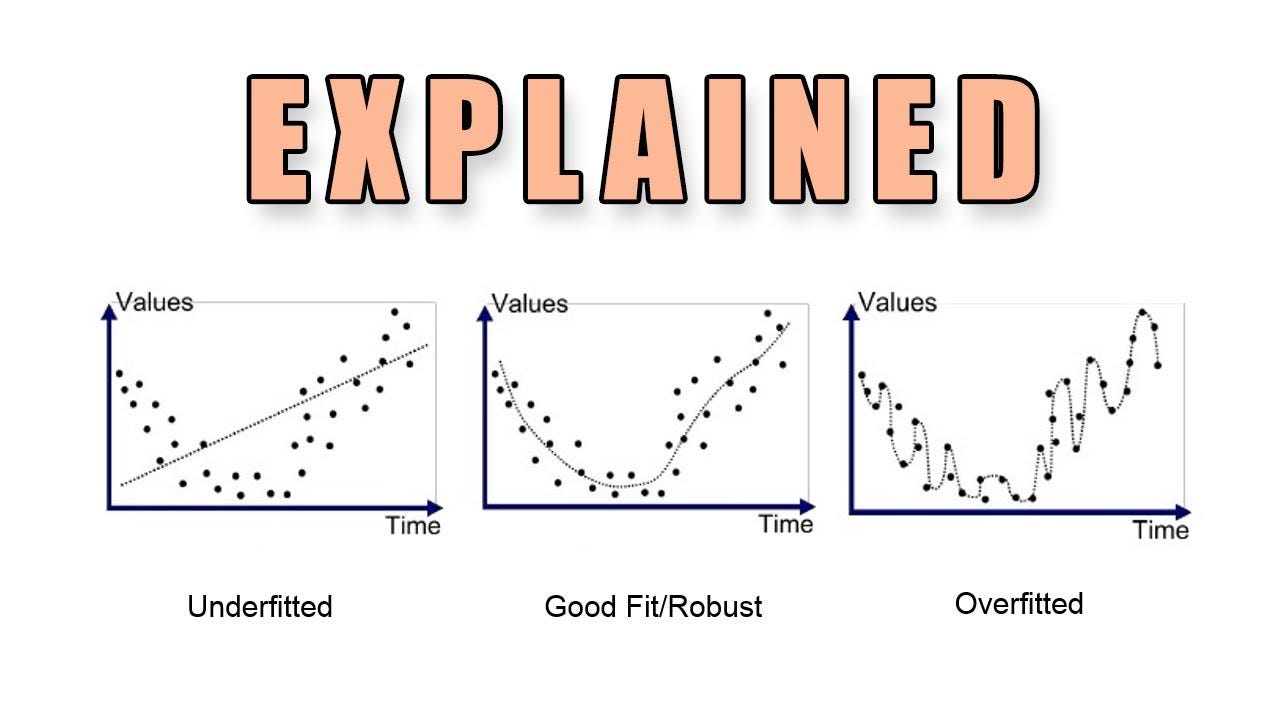

Underfitting is a common problem in machine learning where a model fails to capture the underlying trend of the data. This results in poor performance on both training and test datasets. Let's dive into some interesting facts about underfitting.
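To make the definition concrete, here is a minimal sketch in plain Python (no ML library assumed). It fits a straight line to synthetic data generated from y = x², a made-up example chosen only for illustration. A line cannot bend, so the fitted model misses the curve, and its error stays high on both the training points and a separate set of test points.

```python
# A minimal illustration of underfitting: a straight line fitted to
# data that actually follows a parabola (y = x**2). Data is synthetic.

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    b = cov / var
    a = mean_y - b * mean_x
    return a, b

def mse(model, xs, ys):
    """Mean squared error of the line (a, b) on the given points."""
    a, b = model
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Noise-free parabola: training points on a grid, test points in between.
train_x = [i / 10 for i in range(-20, 21)]        # -2.0 .. 2.0
test_x = [i / 10 + 0.05 for i in range(-20, 20)]  # -1.95 .. 1.95
train_y = [x ** 2 for x in train_x]
test_y = [x ** 2 for x in test_x]

line = fit_line(train_x, train_y)
train_err = mse(line, train_x, train_y)
test_err = mse(line, test_x, test_y)

# Both errors are large: the model is too simple even for the data it saw.
print(f"train MSE: {train_err:.3f}")
print(f"test MSE:  {test_err:.3f}")
```

Because the data is symmetric around zero, the best straight line is nearly flat, so the model does little better than always predicting the average value. This is the signature of underfitting described above.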

- Underfitting occurs when a model is too simple: it lacks the capacity (for example, enough parameters or a flexible enough functional form) to capture the complexity of the data.
- High bias is a sign of underfitting. Bias refers to the error introduced by approximating a real-world problem with a simplified model.
- Underfitting can be caused by insufficient training. If training stops too early, for example after too few epochs or iterations, the model never finishes learning the patterns in the data.
- Using too few features can lead to underfitting. Features are the input variables the model uses to make predictions.
- Underfitting is the opposite of overfitting. Overfitting happens when a model is too complex and fits noise in the training data as if it were real signal.
- Excessive regularization can cause underfitting. Techniques such as L1 and L2 regularization penalize large model weights, and a penalty that is too strong can make the model too simple.
- Underfitting results in high training error. The model performs poorly even on the data it was trained on.
- Decision trees can underfit if they are too shallow. A shallow tree doesn't have enough depth to capture the complexity of the data.
- Linear models often underfit non-linear data. Linear models assume the output is a linear function of the inputs (a straight line when there is one input), which isn't always the case.
- Underfitting can be detected using learning curves. Learning curves plot training and validation error against training-set size or training iterations; when both errors level off at a high value close to each other, the model is likely underfitting.
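The learning-curve rule of thumb above can be sketched as a small helper. The function name `diagnose`, the thresholds `target_error` and `gap_tolerance`, and the example curves are all hypothetical; in practice the error values would come from your own training runs (for example, scikit-learn's `learning_curve` utility), and the thresholds depend on the problem.

```python
def diagnose(train_errors, val_errors, target_error, gap_tolerance):
    """Rough reading of a learning curve.

    train_errors / val_errors: errors recorded at increasing training-set
    sizes (or epochs). target_error and gap_tolerance are illustrative
    thresholds you would choose for your own problem.
    """
    final_train = train_errors[-1]
    final_val = val_errors[-1]
    gap = final_val - final_train
    high_train_error = final_train > target_error
    large_gap = gap > gap_tolerance
    if high_train_error and not large_gap:
        return "underfitting (high bias)"
    if large_gap and not high_train_error:
        return "overfitting (high variance)"
    if high_train_error and large_gap:
        return "high bias and high variance"
    return "no obvious problem"

# Hypothetical curves: both errors flatten out at a high level, close together.
train_curve = [0.30, 0.38, 0.41, 0.42]
val_curve = [0.60, 0.48, 0.45, 0.44]
print(diagnose(train_curve, val_curve, target_error=0.10, gap_tolerance=0.05))
```

The key distinction is the gap: an underfit model has high training error and a small train/validation gap, while an overfit model has low training error and a large gap.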

Causes of Underfitting

Understanding the causes of underfitting can help in preventing it. Here are some common reasons why underfitting occurs.

- Insufficient model complexity. A model that is too simple won't capture the nuances in the data.
- Too much regularization. While regularization helps prevent overfitting, too much of it can lead to underfitting.
- Poor feature selection. Choosing the wrong features can make it difficult for the model to learn.
- Inadequate training data. If the data doesn't contain enough information to reveal the underlying pattern, the model can't learn it effectively.
- Incorrect model choice. Using a model family that doesn't match the structure of the data, such as a linear model for a strongly non-linear relationship, can result in underfitting.
- Low learning rate. A learning rate that is too low can keep the model from converging within the training budget, leaving it underfit.
- High-bias algorithms. Algorithms with high bias, like linear regression, are more prone to underfitting, especially on non-linear problems.
- Ignoring important variables. Leaving out key variables can make the model too simplistic.
- Data preprocessing errors. Mistakes such as discarding informative columns or binning continuous variables too coarsely can strip out the signal the model needs.
- Simplistic assumptions. Assuming a simple relationship between variables can cause underfitting.
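Two of the causes above, too much regularization and a learning rate that is too low, can be reproduced with the same model and data. The sketch below uses plain-Python batch gradient descent on synthetic data from y = 3x + 1 (a made-up relationship the linear model can represent exactly). The penalty strength `lam=1.0`, the learning rate `0.0001`, and the 500-epoch budget are illustrative choices, not recommended values.

```python
def train_linear(xs, ys, lr, lam, epochs):
    """Batch gradient descent for y ≈ w*x + b, minimizing MSE + lam * w**2.

    The L2 penalty is applied to the weight w only, not to the bias b.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        preds = [w * x + b for x in xs]
        # Gradient of the mean squared error plus the L2 penalty term.
        grad_w = sum(2 * (p - y) * x for p, x, y in zip(preds, xs, ys)) / n + 2 * lam * w
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(params, xs, ys):
    """Training mean squared error (without the penalty term)."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [i / 10 for i in range(-10, 11)]  # -1.0 .. 1.0
ys = [3 * x + 1 for x in xs]           # a linear model CAN fit this exactly

baseline = train_linear(xs, ys, lr=0.1, lam=0.0, epochs=500)
heavy_reg = train_linear(xs, ys, lr=0.1, lam=1.0, epochs=500)   # penalty too strong
tiny_lr = train_linear(xs, ys, lr=0.0001, lam=0.0, epochs=500)  # steps too small

for name, params in [("baseline", baseline), ("heavy_reg", heavy_reg), ("tiny_lr", tiny_lr)]:
    print(f"{name}: w={params[0]:.3f}, b={params[1]:.3f}, train MSE={mse(params, xs, ys):.4f}")
```

The baseline recovers w ≈ 3 and b ≈ 1 with near-zero training error. The other two runs use the same model but end with high training error: the strong penalty pulls w toward zero, and the tiny learning rate runs out of epochs before converging. In both cases the model underfits even though its capacity is sufficient.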

How to Prevent Underfitting

Preventing underfitting involves giving the model enough capacity, informative features, and sufficient training. Here are some strategies to prevent underfitting.

- Increase model complexity. Adding more parameters can help the model capture the data's complexity.
- Use more features. Including additional relevant features can improve model performance.
- Reduce regularization. Lowering regularization penalties can make the model more flexible.
- Use a more complex model. Switching to a more expressive model family, for example from a linear model to a tree ensemble or a neural network, can help prevent underfitting.
- Increase training data. More data helps mainly when the model also has enough capacity; on its own, extra data rarely fixes a model that is too simple.
- Tune hyperparameters. Adjusting hyperparameters such as tree depth, network size, learning rate, or regularization strength can improve model performance.
- Use ensemble methods. Combining multiple models can reduce underfitting; boosting in particular, which fits each new model to the errors of the previous ones, is designed to reduce bias.
- Cross-validation. Using cross-validation can help in selecting the right model and parameters.
- Feature engineering. Creating new features can help the model learn better.
- Data augmentation. Generating additional, varied training examples can improve model training.
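Feature engineering can be seen in a tiny example: a straight-line fit underfits parabola-shaped data, but the same straight-line fit becomes exact once it is given an engineered feature z = x². The plain-Python sketch below uses synthetic, noise-free data from y = x² as an illustrative assumption; real data would need a feature chosen from domain knowledge or exploration.

```python
def fit_line(zs, ys):
    """Ordinary least squares for y = a + b*z; returns (a, b)."""
    n = len(zs)
    mean_z = sum(zs) / n
    mean_y = sum(ys) / n
    cov = sum((z - mean_z) * (y - mean_y) for z, y in zip(zs, ys))
    var = sum((z - mean_z) ** 2 for z in zs)
    b = cov / var
    return mean_y - b * mean_z, b

def mse(model, zs, ys):
    """Mean squared error of the line (a, b) on feature values zs."""
    a, b = model
    return sum((a + b * z - y) ** 2 for z, y in zip(zs, ys)) / len(zs)

xs = [i / 10 for i in range(-20, 21)]  # raw input, -2.0 .. 2.0
ys = [x ** 2 for x in xs]              # synthetic non-linear target

# Raw feature: the line cannot follow the curve, so training error is high.
raw_err = mse(fit_line(xs, ys), xs, ys)

# Engineered feature z = x**2: the relationship is now linear in z.
zs = [x * x for x in xs]
eng_err = mse(fit_line(zs, ys), zs, ys)

print(f"raw feature x:         train MSE {raw_err:.3f}")
print(f"engineered feature x^2: train MSE {eng_err:.3f}")
```

The model itself did not change; only its input did. This is why adding informative features and increasing model complexity are often interchangeable remedies for underfitting.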

Examples of Underfitting

Understanding real-world examples can help in recognizing and addressing underfitting. Here are some common scenarios where underfitting occurs.

- Predicting house prices with linear regression. If the relationship between features and prices is non-linear, a linear model will underfit.
- Classifying images with a simple neural network. A network with too few layers won't capture the complexity of image data.
- Forecasting stock prices with a basic model. Stock prices are influenced by many factors, and a simple model won't capture all of them.

The Final Word on Underfit

Underfitting is more than just a term in machine learning. It’s a crucial concept that can make or break your model’s performance. An underfit model is too simple to capture the underlying patterns in the data, which leads to poor predictions and unreliable results. To avoid underfitting, make sure your model is complex enough to learn from the data but not so complex that it overfits. Regularly check your model’s performance on validation data and adjust parameters as needed. Understanding underfitting helps you build more accurate, reliable models that make better predictions. So keep an eye on your model’s complexity and aim for the sweet spot where it’s just right. Happy modeling!
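Finding that sweet spot with validation data can be sketched as a simple model-selection loop. The plain-Python example below uses polynomial degree as the complexity knob and a single hold-out split (not full cross-validation); the cubic data, noise level, seed, and degree range are all illustrative assumptions. Low degrees underfit and score poorly on the validation points, and the loop keeps the degree with the lowest validation error.

```python
import random

def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_poly(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (X^T X) w = X^T y."""
    rows = [[x ** p for p in range(degree + 1)] for x in xs]
    k = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)

def poly_mse(coefs, xs, ys):
    """Mean squared error of the polynomial with coefficients coefs (lowest power first)."""
    preds = [sum(c * x ** p for p, c in enumerate(coefs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

# Synthetic data: a cubic trend plus small Gaussian noise (seeded for repeatability).
rng = random.Random(0)
xs = [i / 20 for i in range(-20, 21)]  # 41 points in [-1, 1]
ys = [2 * x ** 3 - x + rng.gauss(0, 0.05) for x in xs]

# Hold-out split: alternate points go to training and validation.
train_x, train_y = xs[0::2], ys[0::2]
val_x, val_y = xs[1::2], ys[1::2]

# Score each candidate complexity on data the model did not see during fitting.
val_errors = {}
for degree in range(1, 7):
    coefs = fit_poly(train_x, train_y, degree)
    val_errors[degree] = poly_mse(coefs, val_x, val_y)

best_degree = min(val_errors, key=val_errors.get)
for degree, err in sorted(val_errors.items()):
    print(f"degree {degree}: validation MSE {err:.4f}")
print("selected degree:", best_degree)
```

Degrees 1 and 2 cannot represent the cubic trend, so their validation error stays high. From degree 3 upward the error drops to roughly the noise level. In practice, k-fold cross-validation gives a more reliable estimate than one split, at the cost of fitting each candidate several times.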
