Entropy is a fascinating concept that plays a crucial role in physics. It is a measure of disorder or randomness in a system and has profound implications for understanding the behavior of phenomena across our universe. From the fundamental laws of thermodynamics to the functioning of engines and even the evolution of the cosmos, entropy helps explain why natural processes unfold the way they do. In this article, we will delve into 16 extraordinary facts about entropy that will not only expand your knowledge but also leave you in awe of the intricate workings of the world around us. So, buckle up and get ready to dive into the captivating world of entropy.

Key Takeaways:

- Entropy measures disorder and randomness in the universe, influencing everything from black holes to biological systems. It’s like the universe’s natural tendency to become messier over time.

- Entropy is crucial for understanding how things work in the universe, from communication systems to the eventual fate of the cosmos. It’s like a key to unlocking the secrets of the universe’s inner workings.

An Introduction to Entropy

Entropy is a fundamental concept in physics that measures the level of disorder or randomness in a system. It plays a crucial role in thermodynamics, information theory, and statistical mechanics.

Entropy and the Second Law of Thermodynamics

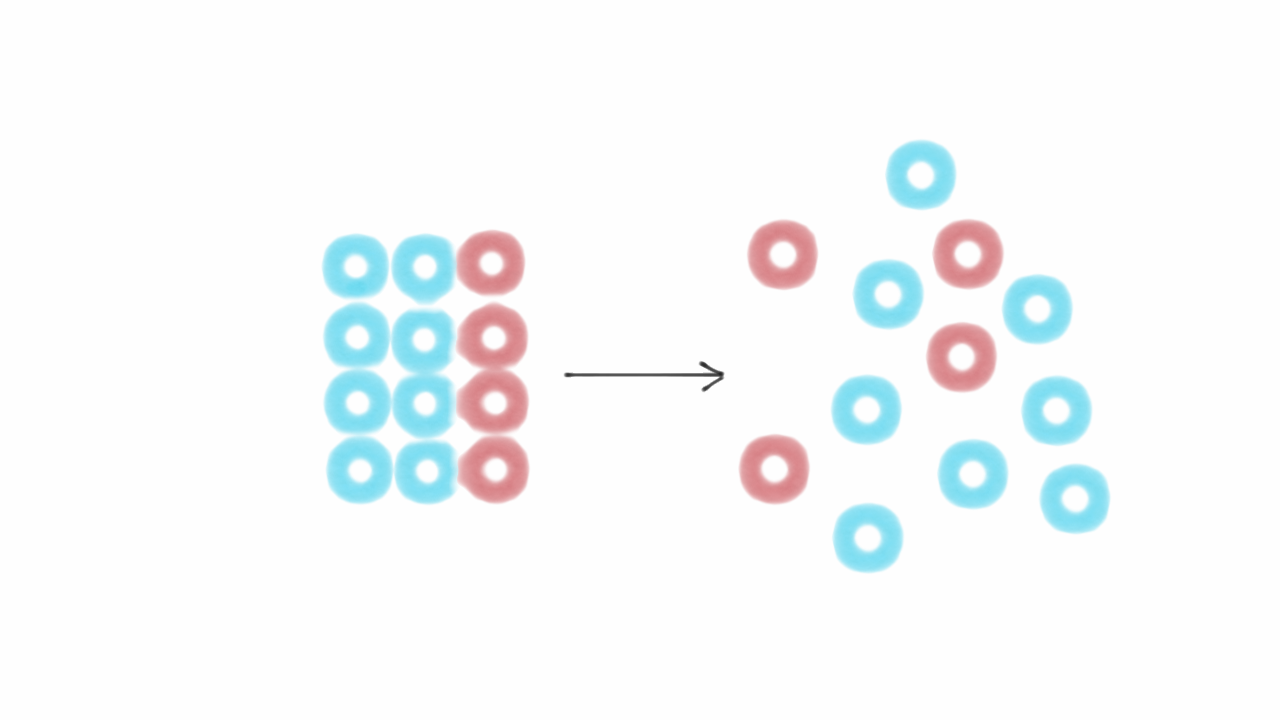

The second law of thermodynamics states that the entropy of an isolated system never decreases over time: it increases in irreversible processes and stays constant only in idealized reversible ones. This law gives physical processes a direction, a natural flow towards equilibrium.
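A small numerical sketch can make this concrete. It assumes two large reservoirs whose temperatures stay fixed and uses the standard relation that a reservoir at temperature T exchanging heat Q changes its entropy by Q/T. The function name and the numbers are illustrative and do not come from the article.

```python
def entropy_change_heat_flow(q_joules, t_hot_kelvin, t_cold_kelvin):
    """Total entropy change when heat q flows from a hot to a cold reservoir.

    Assumes both reservoirs are large enough that their temperatures stay fixed.
    """
    ds_hot = -q_joules / t_hot_kelvin    # hot reservoir loses entropy
    ds_cold = q_joules / t_cold_kelvin   # cold reservoir gains more entropy
    return ds_hot + ds_cold


# 1000 J flowing from 400 K to 300 K: total entropy rises by about 0.83 J/K.
print(entropy_change_heat_flow(1000.0, 400.0, 300.0))
```

The total is positive whenever heat flows from hot to cold, and it drops to zero only when the two temperatures are equal, which is the equilibrium case.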

The Boltzmann Formula

Entropy can be mathematically quantified using the Boltzmann formula: S = k ln W, where S represents entropy, k is Boltzmann’s constant, and W is the number of microstates corresponding to a given macrostate.
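As a minimal sketch, the formula can be evaluated directly. The function name `boltzmann_entropy` is an illustrative choice, and the constant is the SI value of Boltzmann's constant.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact SI value)


def boltzmann_entropy(num_microstates):
    """Return S = k ln W in J/K for a macrostate with W microstates."""
    if num_microstates < 1:
        raise ValueError("W must be at least 1")
    return K_B * math.log(num_microstates)


# A macrostate with a single microstate has zero entropy.
print(boltzmann_entropy(1))
# Doubling W always adds the same amount of entropy, k ln 2.
print(boltzmann_entropy(2_000) - boltzmann_entropy(1_000))
```

Because of the logarithm, entropy grows slowly with W: multiplying the number of microstates by a fixed factor adds a fixed amount of entropy.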

Entropic Arrow of Time

Entropy is intimately connected to the concept of the arrow of time. As time progresses, entropy tends to increase, indicating an irreversible flow of events from a state of lower disorder to higher disorder.

Entropy and Information Theory

In information theory, entropy represents the amount of uncertainty or randomness in a message or data set. It quantifies the average amount of information required to describe or predict an outcome.
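One way to see this is to compute the Shannon entropy of a short message from its symbol frequencies. The sketch below assumes each symbol is drawn independently with the observed frequencies; the function name is illustrative.

```python
import math
from collections import Counter


def shannon_entropy(message):
    """Shannon entropy of the symbol frequencies in `message`, in bits per symbol."""
    counts = Counter(message)
    total = len(message)
    return sum(-(n / total) * math.log2(n / total) for n in counts.values())


print(shannon_entropy("aaaaaaaa"))  # fully predictable: 0 bits per symbol
print(shannon_entropy("abababab"))  # two equally likely symbols: 1 bit
print(shannon_entropy("abcdefgh"))  # eight equally likely symbols: 3 bits
```

The more evenly the symbols are spread, the harder the next one is to predict and the higher the entropy.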

Maximum Entropy Principle

The maximum entropy principle states that when only limited information is available about a system, the best choice is the probability distribution with the highest entropy among those consistent with what is known. That distribution assumes the least beyond the available information.
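A quick comparison illustrates the idea for the simplest case, where nothing is known except that there are four possible outcomes. The three candidate distributions below are made up for illustration.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits of a discrete probability distribution."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


candidates = {
    "uniform": [0.25, 0.25, 0.25, 0.25],
    "skewed": [0.70, 0.10, 0.10, 0.10],
    "certain": [1.00, 0.00, 0.00, 0.00],
}

# With no constraint beyond "probabilities sum to 1", the uniform
# distribution has the highest entropy of the three.
best = max(candidates, key=lambda name: entropy_bits(candidates[name]))
print(best)  # uniform
```

When extra constraints are known, such as a fixed average value, the maximum entropy distribution is generally no longer uniform, and finding it requires an optimization that this sketch does not attempt.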

Entropy and the Black Hole Information Paradox

The study of black holes has led to the famous black hole information paradox, which involves the question of whether information can be completely lost in a black hole or if it is somehow encoded in the event horizon’s entropy.
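To give a sense of scale, the entropy usually attributed to an event horizon is the Bekenstein-Hawking entropy, S = k c^3 A / (4 G ħ), where A is the horizon area. That formula is not stated in this article, so the sketch below is an assumption-laden illustration: it assumes a non-rotating, uncharged black hole and uses an approximate solar mass.

```python
import math

G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light, m/s
HBAR = 1.054571817e-34   # reduced Planck constant, J s
SOLAR_MASS = 1.989e30    # approximate mass of the Sun, kg


def horizon_entropy_in_units_of_k(mass_kg):
    """Bekenstein-Hawking entropy S / k for a Schwarzschild black hole."""
    r_s = 2 * G * mass_kg / C**2   # Schwarzschild radius
    area = 4 * math.pi * r_s**2    # horizon area
    return C**3 * area / (4 * G * HBAR)


# Roughly 1e77 (in units of Boltzmann's constant) for one solar mass.
print(f"{horizon_entropy_in_units_of_k(SOLAR_MASS):.2e}")
```

Because the entropy scales with the horizon area, doubling the mass quadruples the entropy.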

Entropy and the Universe

According to cosmology, the entropy of the universe tends to increase as it expands. This is closely related to the second law of thermodynamics and the eventual heat death of the universe.

Entropy and Statistical Mechanics

In statistical mechanics, entropy is a measure of the number of possible microscopic configurations that correspond to a given macroscopic state. It relates to the concept of disorder and the probability distribution of particles.
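Counting microstates is easy to try for a toy system. The sketch below assumes 100 coins, treats the number of heads as the macrostate, and treats each specific head/tail arrangement as a microstate. The system and names are illustrative, not from the article.

```python
import math

N_COINS = 100  # illustrative system size


def microstates(num_heads, num_coins=N_COINS):
    """Number of distinct head/tail arrangements with exactly `num_heads` heads."""
    return math.comb(num_coins, num_heads)


for heads in (0, 10, 50):
    w = microstates(heads)
    print(heads, w, math.log(w))  # ln W is the entropy in units of k
```

The all-tails macrostate has exactly one microstate, while the half-heads macrostate has vastly more than any other, which is why a shuffled system is overwhelmingly likely to be found near it.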

Entropy and the Three Laws of Thermodynamics

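The three laws of thermodynamics govern the behavior of energy in physical systems. Entropy appears in the second law, which states that the entropy of an isolated system never decreases, and in the third law, which states that the entropy of a system approaches a constant minimum as its temperature approaches absolute zero.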

Entropy and Communication Systems

In communication systems, entropy represents the average amount of information carried per symbol of a message source. It sets a lower limit on how far messages can be compressed without loss, so efficient coding schemes aim to remove redundancy and approach that limit.
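Huffman coding is one well-known coding scheme of this kind. The sketch below is a simplified illustration rather than a production encoder: it computes only the codeword lengths, and the four-symbol source with its probabilities is invented for the example.

```python
import heapq
import math


def huffman_code_lengths(probabilities):
    """Return the Huffman codeword length, in bits, for each symbol."""
    if len(probabilities) == 1:
        return {symbol: 1 for symbol in probabilities}
    # Heap entries: (probability, tie_breaker, symbols in this subtree).
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probabilities.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probabilities}
    tie_breaker = len(heap)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for s in group1 + group2:
            lengths[s] += 1  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, (p1 + p2, tie_breaker, group1 + group2))
        tie_breaker += 1
    return lengths


source = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
lengths = huffman_code_lengths(source)
entropy = sum(-p * math.log2(p) for p in source.values())
average_length = sum(source[s] * lengths[s] for s in source)
print(lengths, entropy, average_length)
```

For this source the average codeword length equals the entropy, 1.75 bits per symbol, because every probability is a power of one half. For other sources the Huffman average is slightly above the entropy but never below it.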

Entropy and Biological Systems

Entropy also plays a role in understanding biological systems. Living organisms maintain a low-entropy state to carry out essential processes, and they do so by taking in energy and releasing heat and waste, which raises the entropy of their surroundings. The process of aging can be linked to an increase in entropy at the molecular level.

Entropy and Disorder in Crystals

Contrary to its association with disorder, entropy also plays a role in understanding the order in crystalline structures. The arrangement of atoms in a crystal lattice corresponds to a lower entropy state compared to a disordered state.

Entropy and the Information Paradox

The information paradox refers to the conflict between the laws of quantum mechanics, which imply that information is never destroyed, and the apparent loss of information when a black hole evaporates. Whether that information is truly lost or is somehow preserved remains the subject of ongoing debate within the scientific community.

Entropy and Complexity

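The concept of entropy is closely related to complexity, although the two are not the same. A perfectly ordered system has low entropy and a fully random one has high entropy, yet neither has much structure, and the most intricate systems tend to lie between these extremes. Understanding the relationship between entropy and complexity is a topic of ongoing research.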

The Entropy of the Universe

The entropy of the universe is ever-increasing, contributing to the gradual decay and disorganization of physical systems. This cosmic entropy has profound implications for the future evolution of our universe.

Understanding the Fascinating World of Entropy

These 16 extraordinary facts about entropy provide just a glimpse into the fascinating world of this fundamental concept. From its role in thermodynamics and information theory to its connection with time and complexity, entropy offers deep insights into the workings of our universe.

Whether you’re exploring the behavior of black holes or studying the intricacies of communication systems, understanding entropy is crucial for comprehending the underlying principles that govern our physical reality.

So dive in, embrace the complexity, and uncover the extraordinary significance of entropy in shaping our understanding of the universe.

Conclusion

In conclusion, entropy is a fascinating concept in physics that plays a crucial role in understanding the universe at both the microscopic and macroscopic levels. These extraordinary facts about entropy highlight its fundamental nature and its impact on various aspects of our lives.

From the tendency for systems to move towards disorder to the relationship between entropy and energy, these facts demonstrate the intricate connection between entropy and the laws of thermodynamics. Entropy not only governs the behavior of physical systems but also plays a vital role in fields such as information theory and cosmology.

Understanding entropy allows us to appreciate the underlying order in the universe while also acknowledging its natural tendency towards disorder. Whether it’s the concept of heat death or the role of entropy in everyday processes, entropy offers a profound perspective on the inner workings of our world.

FAQs

1. What is entropy?

Entropy is a measure of the disorder or randomness in a system. It quantifies the number of ways a system can be arranged without changing its overall properties.

2. How does entropy relate to thermodynamics?

Entropy is closely linked to the laws of thermodynamics. The second law of thermodynamics states that the entropy of an isolated system always increases or remains constant. This law helps explain why natural processes tend towards equilibrium and why some processes are irreversible.

3. Can entropy be decreased?

In an isolated system, the overall entropy can only increase or remain constant. However, it’s possible for localized decreases in entropy to occur, such as when energy is input into a specific region. These localized decreases are always accompanied by a greater increase in entropy elsewhere in the system or the surroundings.
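A refrigerator is a familiar example of such a localized decrease. The sketch below assumes an idealized refrigerator working between a cold interior and a warm room, both at constant temperature; the function name and numbers are illustrative.

```python
def total_entropy_change(q_cold, work, t_cold, t_hot):
    """Entropy change of cold interior plus warm room for one refrigeration cycle.

    q_cold: heat removed from the interior (J); work: energy input (J).
    Temperatures are in kelvin and assumed constant.
    """
    q_hot = q_cold + work            # energy conservation: heat dumped into the room
    ds_interior = -q_cold / t_cold   # local decrease
    ds_room = q_hot / t_hot          # larger increase elsewhere
    return ds_interior + ds_room


# Removing 1000 J from a 275 K interior into a 300 K room with 150 J of work.
print(total_entropy_change(1000.0, 150.0, 275.0, 300.0))
```

The result is positive, so the process is allowed. Rerunning it with only 50 J of work gives a negative total, which signals that no real refrigerator could do the job with so little energy input.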

4. What is the concept of heat death?

Heat death is a hypothetical final state of the universe in which it reaches maximum entropy and thermal equilibrium. At this point, no temperature differences remain, so no further net energy transfer or useful work can occur.

5. How is entropy related to information theory?

In information theory, entropy measures the amount of uncertainty or randomness in a set of data. It quantifies the average amount of information required to describe the data, where higher entropy indicates greater unpredictability.

6. Does entropy have any real-life applications?

Entropy has numerous practical applications, such as in the field of computer science, where it is used for data compression and encryption algorithms. It also plays a vital role in understanding natural processes like diffusion, chemical reactions, and even the behavior of black holes.

