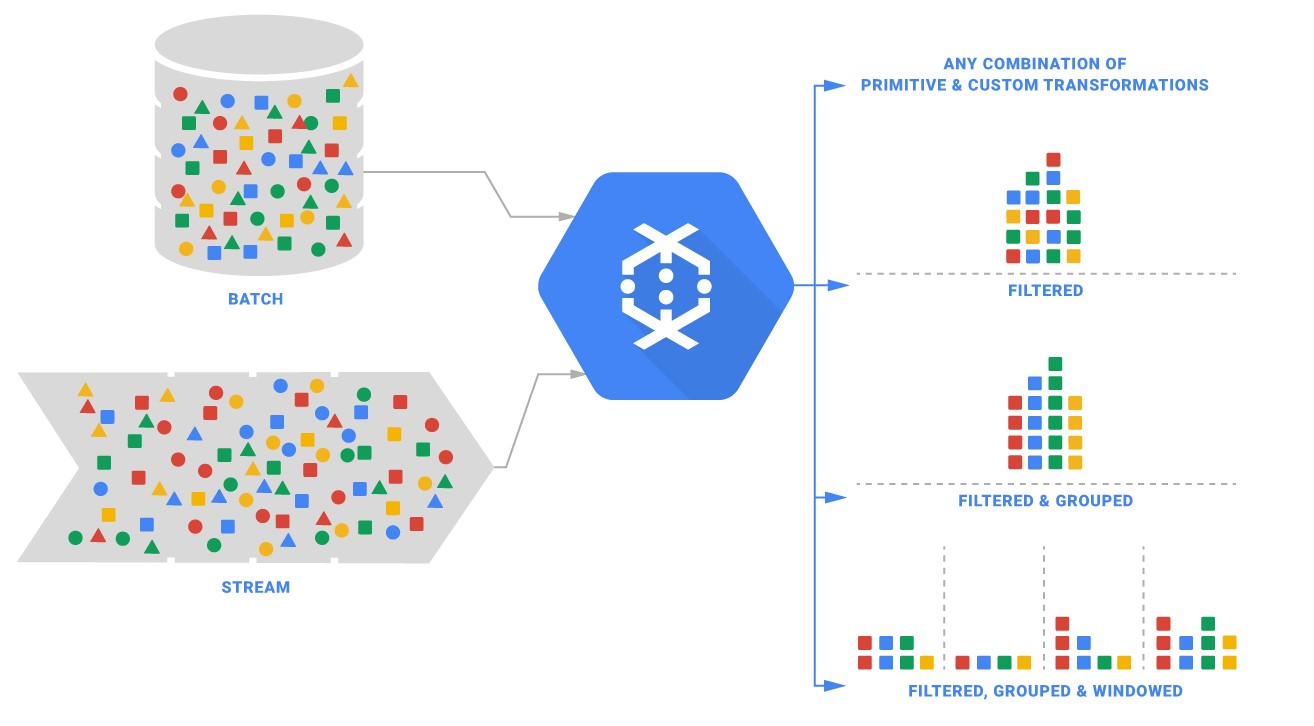

Dataflow is both a programming model for processing large datasets and the name of a managed Google Cloud service built around that idea. What makes it useful? It supports streaming, so insights can arrive within moments of the data, and it handles batch workloads just as well. The Google Cloud service scales automatically under heavy load and integrates with other Google Cloud services. Ready to dive into more details? Let's explore 20 facts about Dataflow!

What is Dataflow?

Dataflow is a programming model in which a program is described as a graph of operations that data flows through. Because independent operations can run in parallel, it is efficient for processing and analyzing large datasets. Here are some facts about dataflow:

- Parallel Processing: Dataflow enables parallel processing, which means multiple data operations can occur simultaneously. This boosts efficiency and speed.
- Scalability: Dataflow systems can scale horizontally. Adding more machines to the network increases processing power without changing the code.
- Fault Tolerance: Dataflow models are designed to handle failures gracefully. If a node fails, the system can reroute tasks to other nodes.
- Data Pipelines: Dataflow is often used to create data pipelines, which automate the flow of data from one process to another.
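To make the pipeline and parallel-processing ideas concrete, here is a minimal sketch in plain Python. It is not tied to any particular dataflow product: the stage names `parse` and `square` and the helper `run_pipeline` are hypothetical, and a thread pool stands in for the cluster of machines a real system would use.

```python
from concurrent.futures import ThreadPoolExecutor


def parse(line: str) -> int:
    """Stage 1 (hypothetical): turn a raw text record into a number."""
    return int(line.strip())


def square(value: int) -> int:
    """Stage 2 (hypothetical): a stand-in for any per-record computation."""
    return value * value


def run_pipeline(lines, workers=4):
    """Push every record through both stages, then aggregate the results.

    Records are independent of each other, so each stage can process
    many of them concurrently. Changing `workers` changes the degree of
    parallelism without touching the stage functions.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parsed = list(pool.map(parse, lines))      # stage 1 over all records
        squared = list(pool.map(square, parsed))   # stage 2 over stage 1 output
    return sum(squared)


if __name__ == "__main__":
    print(run_pipeline(["1", "2", "3", "4"]))  # prints 30
```

Threads keep the sketch short. A production system would spread the same stages across processes or machines, which is where the horizontal scaling described above comes in.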

Historical Background of Dataflow

Understanding the history of dataflow helps appreciate its evolution and significance in modern computing.

- Origin: The concept of dataflow dates back to the 1960s. It was initially developed for parallel computing.
- Early Implementations: Early dataflow models were implemented in specialized hardware, which limited their use.
- Software Evolution: With advancements in software, dataflow models became more accessible and widely used in various applications.

- Google Dataflow: Google announced its Cloud Dataflow service in 2014 and made it generally available in 2015, bringing managed dataflow processing to the cloud.

Key Components of Dataflow

Dataflow systems consist of several key components that work together to process data efficiently.

- Nodes: Nodes are the basic units of a dataflow system. Each node performs a specific operation on the data.
- Edges: Edges connect nodes and represent the flow of data between them.
- Schedulers: Schedulers manage the execution of tasks within the dataflow system, ensuring optimal resource utilization.
- Buffers: Buffers temporarily store data between nodes, allowing for smooth data transfer.
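The four components above can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions, not how any specific dataflow engine is built: the `Node` class, `connect`, `run`, and `demo` are hypothetical names, buffers are simple in-memory queues, and the scheduler just loops until no node has input left.

```python
from collections import deque


class Node:
    """A node applies one operation once every input buffer holds a value."""

    def __init__(self, name, func, n_inputs):
        self.name = name
        self.func = func
        # One buffer per incoming edge; values wait here until the node fires.
        self.inputs = [deque() for _ in range(n_inputs)]
        # Outgoing edges, stored as (target node, index of its input buffer).
        self.outputs = []

    def ready(self):
        return all(len(buf) > 0 for buf in self.inputs)

    def fire(self):
        args = [buf.popleft() for buf in self.inputs]
        result = self.func(*args)
        for target, index in self.outputs:
            target.inputs[index].append(result)  # send the result along each edge
        return result


def connect(src, dst, index):
    """Add an edge from src's output to one of dst's input buffers."""
    src.outputs.append((dst, index))


def run(nodes):
    """Toy scheduler: keep firing ready nodes until none are ready."""
    results = {}
    progress = True
    while progress:
        progress = False
        for node in nodes:
            if node.ready():
                results[node.name] = node.fire()
                progress = True
    return results


def demo():
    """Compute (5 + 3) * (5 - 3) as a three-node graph."""
    add = Node("add", lambda a, b: a + b, 2)
    sub = Node("sub", lambda a, b: a - b, 2)
    mul = Node("mul", lambda a, b: a * b, 2)
    connect(add, mul, 0)
    connect(sub, mul, 1)
    for node in (add, sub):  # seed the graph with external input
        node.inputs[0].append(5)
        node.inputs[1].append(3)
    return run([mul, add, sub])  # listing order does not matter


if __name__ == "__main__":
    print(demo()["mul"])  # prints 16
```

Note that `mul` is listed first but runs last: execution order is driven by when data becomes available, not by the order the code is written in. The `add` and `sub` nodes do not depend on each other, so a real scheduler could run them at the same time.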

Applications of Dataflow

Dataflow models are used in various fields, from scientific research to business analytics.

- Big Data Analytics: Dataflow is ideal for analyzing large datasets, making it popular in big data analytics.
- Machine Learning: Dataflow models are used to preprocess data for machine learning algorithms, improving their accuracy and efficiency.
- Real-time Processing: Dataflow systems can process data in real-time, making them suitable for applications like fraud detection and monitoring.
- ETL Processes: Extract, Transform, Load (ETL) processes benefit from dataflow models, which automate and streamline data transformation.
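As a rough illustration of the ETL pattern, the sketch below chains three Python generators so that each record flows from extract to transform to load. Everything here is made up for the example: the sample data in `RAW`, the field names, and the function names are assumptions, and a plain list stands in for a real database or warehouse.

```python
import csv
import io

# Hypothetical input: a small CSV export with one malformed row.
RAW = "user,amount\nalice, 10.5\nbob,not_a_number\ncarol,4\n"


def extract(text):
    """Extract: read CSV text and yield one dict per row."""
    yield from csv.DictReader(io.StringIO(text))


def transform(rows):
    """Transform: normalise names, convert amounts, skip malformed rows."""
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount is not a number
        yield {"user": row["user"].strip().title(), "amount": amount}


def load(rows, sink):
    """Load: append cleaned rows to the sink (a list in this sketch)."""
    for row in rows:
        sink.append(row)
    return sink


def run_etl(text):
    """Wire the three stages together; rows stream through one at a time."""
    return load(transform(extract(text)), [])


if __name__ == "__main__":
    for record in run_etl(RAW):
        print(record)
```

Because the stages are generators, no stage waits for the previous one to finish the whole dataset. The same shape applies to streaming input, where rows keep arriving instead of coming from a fixed file.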

Advantages of Dataflow

Dataflow models offer several advantages that make them a preferred choice for data processing.

- Efficiency: By enabling parallel processing, dataflow models significantly reduce processing time.
- Flexibility: Dataflow systems can handle various data types and formats, making them versatile.
- Cost-Effective: Horizontal scalability lets you add resources incrementally, so capacity and cost grow only as demand does.
- Ease of Use: Modern dataflow systems come with user-friendly interfaces and tools, simplifying the creation and management of data pipelines.

Final Thoughts on Dataflow

Dataflow simplifies large-scale data processing. Because it handles both batch and stream processing, the same approach covers historical analysis and real-time workloads. Google's Dataflow service integrates with other Google Cloud services, uses a pay-as-you-go pricing model, and includes security features for protecting sensitive data. Its interface and documentation make it approachable for beginners, and its scalability lets it keep up as data volumes grow. By leveraging Dataflow, businesses can gain valuable insights, improve decision-making, and stay competitive in a data-driven world.
