What is a Wasserstein GAN? A Wasserstein GAN (WGAN) is a type of Generative Adversarial Network (GAN) designed to improve training stability and the quality of generated data. Traditional GANs often struggle with unstable training and mode collapse, where the generator produces only a limited variety of outputs. WGANs address these issues with a loss function based on the Wasserstein distance, also known as Earth Mover's Distance, which provides smoother gradients and more reliable convergence. WGANs are particularly useful for generating high-quality images, augmenting training data, and creating realistic simulations. Understanding WGANs opens the door to more robust generative models and advanced AI applications.

What is Wasserstein GAN?

Wasserstein GAN (WGAN) is a type of Generative Adversarial Network (GAN) designed to improve training stability and generate higher quality images. It uses the Wasserstein distance, also known as Earth Mover's Distance, to measure the difference between the generated data distribution and the real data distribution.
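For reference, the distance and the form WGAN actually optimizes can be written out. Here $\mathbb{P}_r$ is the real data distribution, $\mathbb{P}_g$ the generated one, and $\Pi(\mathbb{P}_r, \mathbb{P}_g)$ the set of joint distributions whose marginals are $\mathbb{P}_r$ and $\mathbb{P}_g$, following the notation of the WGAN paper:

$$
W(\mathbb{P}_r, \mathbb{P}_g) = \inf_{\gamma \in \Pi(\mathbb{P}_r, \mathbb{P}_g)} \mathbb{E}_{(x, y) \sim \gamma}\big[\lVert x - y \rVert\big]
$$

By the Kantorovich-Rubinstein duality, the same quantity equals a supremum over 1-Lipschitz functions $f$:

$$
W(\mathbb{P}_r, \mathbb{P}_g) = \sup_{\lVert f \rVert_L \le 1} \; \mathbb{E}_{x \sim \mathbb{P}_r}[f(x)] - \mathbb{E}_{x \sim \mathbb{P}_g}[f(x)]
$$

The second form is why WGAN needs a critic: the critic plays the role of $f$, and the Lipschitz constraint on $f$ is what the critic's training constraints try to enforce.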

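- WGAN was introduced in 2017 by Martin Arjovsky, Soumith Chintala, and Léon Bottou in a paper titled "Wasserstein GAN."

- The Wasserstein distance is the minimum cost of transporting probability mass to turn one distribution into the other, where moving one unit of mass costs the distance it travels. Unlike the Jensen-Shannon divergence implicitly minimized by the original GAN, it stays informative even when the two distributions do not overlap, which makes it a more meaningful signal for GAN training.

- WGAN replaces the discriminator of a traditional GAN with a critic, which assigns each image a real-valued score of how "real" it looks instead of classifying it as real or fake.

- The critic's output layer has no sigmoid activation, so its scores are unbounded and its gradients do not saturate the way a sigmoid classifier's can.

- WGAN clips the critic's weights to a small fixed range to enforce a Lipschitz constraint. Clipping keeps the critic K-Lipschitz for some constant K that depends on the clipping value and the architecture, not exactly 1-Lipschitz, so the critic estimates the Wasserstein distance only up to a scale factor.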
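In one dimension, the Earth Mover's Distance between two equally sized samples has a simple closed form: sort both samples and average the absolute differences between matched values. The sketch below is plain Python, and `wasserstein_1d` is a hypothetical helper written for this illustration, not a library API. It makes the "cost of moving mass" idea concrete:

```python
def wasserstein_1d(xs, ys):
    """Earth Mover's (Wasserstein-1) distance between two equal-size 1-D samples.

    In one dimension the optimal transport plan pairs the sorted samples,
    so the distance is the mean absolute difference between sorted values.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("expects two non-empty samples of equal size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


real = [0.0, 1.0, 2.0, 3.0]
fake = [0.5, 1.5, 2.5, 3.5]  # same shape, shifted right by 0.5
print(wasserstein_1d(real, fake))  # 0.5
```

Shifting every point by 0.5 costs exactly 0.5 per unit of mass, which is what the function returns. A WGAN never computes this directly for high-dimensional images. Instead, the critic learns an approximation.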

Why Wasserstein GAN is Important

WGAN addresses several issues found in traditional GANs, making it a significant advancement in the field of generative models.

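- WGAN was reported to reduce mode collapse, a common problem in GANs where the generator produces only a limited variety of outputs; the original paper saw no evidence of mode collapse in its experiments.

- Training is more stable: the authors report that WGAN needs less careful balancing of generator and critic training and is less sensitive to architecture choices than standard GANs.

- WGAN provides a meaningful loss metric: the critic's estimate of the Wasserstein distance tends to fall as the quality of generated images improves, whereas the loss of a traditional GAN says little about sample quality.

- Under mild assumptions on the generator (for example, that it is a standard feedforward neural network), the Wasserstein distance is continuous in the generator's parameters and differentiable almost everywhere, which gives smoother gradients during training.

- WGAN can be used for various applications, including image generation, data augmentation, and even fields like drug discovery.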
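The claim that the Wasserstein distance gives a more useful training signal can be checked on a toy case. This is a one-dimensional simplification of the "parallel lines" example in the WGAN paper: the real data is a point mass at 0 and the generated data is a point mass at θ. The helpers `js_divergence` and `w1_point_masses` are hypothetical and written only for this illustration:

```python
import math


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete
    distributions given as {outcome: probability} dictionaries."""
    support = set(p) | set(q)
    m = {x: 0.5 * (p.get(x, 0.0) + q.get(x, 0.0)) for x in support}

    def kl_to_m(a):
        # KL(a || m); outcomes with zero probability contribute nothing.
        return sum(a[x] * math.log(a[x] / m[x]) for x in a if a[x] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


def w1_point_masses(a, b):
    """Wasserstein-1 distance between point masses at a and b:
    all of the mass moves a distance |a - b|."""
    return abs(a - b)


real = {0.0: 1.0}  # real data: all mass at 0
for theta in [0.0, 0.5, 1.0, 2.0]:
    fake = {theta: 1.0}  # generated data: all mass at theta
    print(f"theta={theta}: JS={js_divergence(real, fake):.4f}, "
          f"W1={w1_point_masses(0.0, theta)}")
```

For every θ ≠ 0, the Jensen-Shannon divergence stays at log 2 ≈ 0.6931, so it gives the generator no hint about which direction to move. The Wasserstein distance, by contrast, equals |θ| and shrinks smoothly as the generated point approaches the real one.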

How WGAN Works

Understanding the mechanics of WGAN helps in grasping why it performs better than traditional GANs.

-

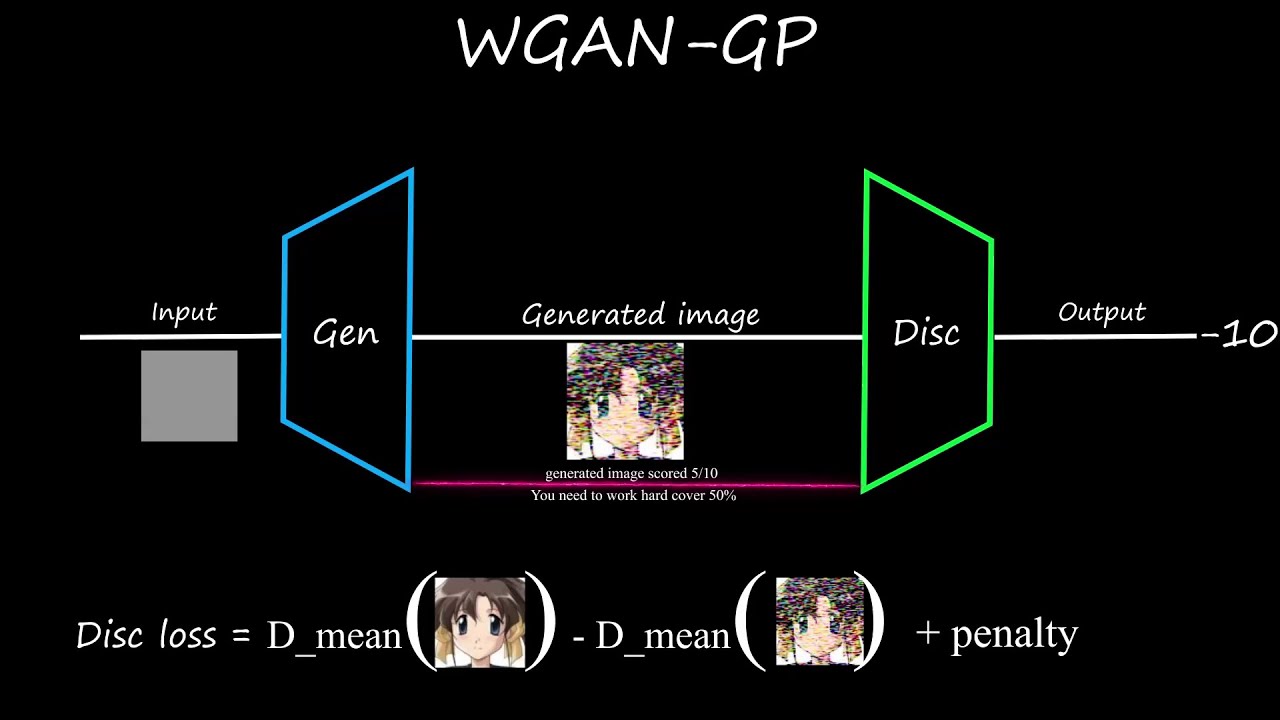

WGAN uses a critic network instead of a discriminator, which scores images rather than classifying them.

-

The generator in WGAN aims to minimize the Wasserstein distance between the generated and real data distributions.

-

Weight clipping in WGAN ensures that the critic function remains within a certain range, maintaining the Lipschitz constraint.

-

The critic's loss function in WGAN is designed to maximize the difference between the scores of real and generated images.

-

WGAN training involves alternating between updating the critic and the generator, similar to traditional GANs but with different loss functions.
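The training procedure described above can be sketched end to end on a deliberately tiny problem. This is a toy built on strong assumptions, not the paper's implementation. The "real" data is a 1-D Gaussian with mean 3, the generator is G(z) = z + θ, the critic is linear, f(x) = w·x, and plain gradient steps replace RMSProp. The names and hyperparameter values (`train_toy_wgan`, `clip_c`, `lr_gen`, and so on) are illustrative choices:

```python
import random


def clip(value, c):
    """Weight clipping: clamp a parameter to the interval [-c, c]."""
    return max(-c, min(c, value))


def mean(xs):
    return sum(xs) / len(xs)


def train_toy_wgan(steps=500, n_critic=5, batch=64, clip_c=0.1,
                   lr_critic=0.05, lr_gen=0.5, seed=0):
    """Toy WGAN on 1-D data with a linear critic and a shift-only generator."""
    rng = random.Random(seed)
    w = 0.0      # critic f(x) = w * x; a bias term would cancel in the loss
    theta = 0.0  # generator G(z) = z + theta with z ~ N(0, 1)

    def real_batch():
        # Assumed "real" data: Gaussian with mean 3 and standard deviation 1.
        return [rng.gauss(3.0, 1.0) for _ in range(batch)]

    def fake_batch():
        return [rng.gauss(0.0, 1.0) + theta for _ in range(batch)]

    for _ in range(steps):
        # Critic: n_critic steps of gradient ascent on
        # E[f(real)] - E[f(fake)] = w * (mean(real) - mean(fake)),
        # with weight clipping after every step.
        for _ in range(n_critic):
            gap = mean(real_batch()) - mean(fake_batch())
            w = clip(w + lr_critic * gap, clip_c)
        # Generator: one step of gradient descent on -E[f(G(z))].
        # The derivative of -w * (z + theta) with respect to theta is -w.
        theta += lr_gen * w

    # Critic's estimate of the Wasserstein distance, scaled by the clipping.
    estimate = w * (mean(real_batch()) - mean(fake_batch()))
    return theta, estimate


theta, estimate = train_toy_wgan()
print(f"learned shift {theta:.2f} (target 3.0), critic estimate {estimate:.4f}")
```

Because a linear critic can only detect a difference in means, this toy generator can only learn the mean of the data. A real WGAN uses deep networks for both players, but the structure of the loop is the same: several clipped critic updates, then one generator update.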

Advantages of Wasserstein GAN

WGAN offers several benefits over traditional GANs, which have made it a popular choice among researchers and practitioners.

- WGAN provides more stable training, reducing the chance that training collapses partway through.

- The loss is more interpretable: because the critic's output estimates the Wasserstein distance, a downward trend during training usually signals improving sample quality.

- Because the Wasserstein distance remains meaningful even when the real and generated distributions barely overlap, WGAN can learn distributions concentrated on low-dimensional structure, a case that causes trouble for traditional GANs.

- The critic can be trained close to optimality without its gradients vanishing, so a better-trained critic gives the generator more useful gradients rather than weaker ones.

- WGAN is reported to be less sensitive to hyperparameters and architecture choices, which simplifies training and makes it more accessible.

Challenges and Limitations

Despite its advantages, WGAN is not without its challenges and limitations.

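- Weight clipping can be problematic: a poorly chosen clipping value can lead to vanishing or exploding gradients, and a large one can make the critic slow to train. Later variants such as WGAN-GP replace clipping with a gradient penalty for this reason.

- Training WGAN can be computationally intensive, partly because the critic is updated several times for every generator update.

- The Lipschitz constraint imposed by weight clipping can limit the expressiveness of the critic, biasing it toward overly simple functions.

- WGAN may still suffer from mode collapse, although to a lesser extent than traditional GANs.

- Implementing WGAN still requires careful tuning, especially of the clipping value and learning rates. The original paper used RMSProp instead of momentum-based optimizers such as Adam, which it found could make training unstable.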
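To see why clipping yields a K-Lipschitz critic rather than a 1-Lipschitz one, and why the clipping value has to be tuned alongside the architecture, consider the simplest possible critic, f(x) = w·x. Its Lipschitz constant is the Euclidean norm of w, so after clipping it can be as large as c·√d for d inputs. The helper names and the choice d = 784 below are assumptions made for illustration:

```python
import math


def clip_weights(weights, c):
    """WGAN-style weight clipping: clamp every weight to [-c, c]."""
    return [max(-c, min(c, w)) for w in weights]


def lipschitz_constant_linear(weights):
    """Lipschitz constant, w.r.t. the Euclidean norm, of f(x) = w . x,
    which equals the Euclidean norm of the weight vector."""
    return math.sqrt(sum(w * w for w in weights))


d = 784    # input size, e.g. a flattened 28x28 image (assumed)
c = 0.01   # clipping value used by default in the WGAN paper
raw = [0.5 if i % 2 == 0 else -0.3 for i in range(d)]

clipped = clip_weights(raw, c)
print(lipschitz_constant_linear(clipped))  # about c * sqrt(d) = 0.28
```

Even this single-layer critic ends up roughly 0.28-Lipschitz rather than 1-Lipschitz. The bound also moves with the input size, and for deeper networks it depends on depth and width. This is why a clipped critic estimates the Wasserstein distance only up to a scale factor.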

Applications of Wasserstein GAN

WGAN has found applications in various fields, showcasing its versatility and effectiveness.

- WGAN is used for image generation, producing high-quality images for purposes including art and entertainment.

- Data augmentation is another application, where WGAN generates synthetic data to enlarge training datasets.

- WGAN has been explored in drug discovery, where generative models propose candidate molecules by learning the structure of known compounds.

- In finance, WGANs have been used to generate synthetic financial time series, which can support risk management and the testing of investment strategies.

- WGAN variants have also been applied to audio and speech generation, with potential uses in virtual assistants and automated customer service.

- WGAN has potential in medical imaging, for example to synthesize additional training images, which could support early detection and treatment planning.

Final Thoughts on Wasserstein GANs

Wasserstein GANs (WGANs) have had a major influence on how generative models are trained. By addressing several limitations of traditional GANs, they offer more stable training and often better-quality outputs. They use the Wasserstein distance, which provides a more meaningful measure of the difference between distributions and leads to more reliable convergence.

Understanding the critic network and the role of weight clipping is crucial for anyone working with WGANs. Clipping keeps the critic Lipschitz so that its scores approximate the Wasserstein distance, and the resulting well-behaved gradients are what make training more stable and reduce, though do not eliminate, mode collapse.

WGANs have found applications in various fields, from image generation to data augmentation. Their ability to produce high-quality, realistic data makes them invaluable tools in machine learning and AI research.

Incorporating WGANs into your projects can improve the stability and reliability of generative tasks. They represent a significant step forward in the evolution of GANs.
